How I rebuilt my UX process flow into one that actually works

Photo by Meghan McSweeney

Over the past few years, I've quietly rebuilt how I work as a senior designer at CarMax. Not because I wanted a trendy new framework, but because the traditional research-to-design-to-development process kept collapsing under the weight of real automotive retail complexity.

So I stopped trying to force my work into a generic UX process that took a long time to work through from discovery to design to development. I rebuilt everything around how the industry actually moves, how decisions really get made when customers are selling their vehicles, and how to maintain momentum when you're designing experiences that need to minimize data entry while maximizing trust.

This is the process I ended up creating. It is simple, tool-specific, and fast. It works equally well for instant offer flows, vehicle appraisal experiences, and ecommerce checkout patterns. And it fits the way I think as a designer who needs to ship in an environment where reducing friction can mean the difference between a completed sale and an abandoned cart.

Why the usual UX process kept failing me

The more I worked on automotive resale and ecommerce problems, the more the standard process felt disconnected from reality.

It wanted clean, linear research phases when we were already getting real-time feedback from customers abandoning checkout flows

It assumed stable requirements when certain priorities shifted weekly

It separated research insights from the data constraints and business rules that actually shaped what we could build

It asked teams to pause sprints for "proper discovery" when we were already behind on a feature that directly affected conversion rates

We design for people trying to sell their cars as quickly as possible, with minimal hassle and maximum trust, while our backend has to validate and sync appraisal data, assess vehicle condition, calculate offers in real-time, and handle edge cases like salvage titles and out-of-state registrations.

I needed something that worked in real conditions. Something that let me go deep on customer needs without getting buried in stakeholder reviews. Something that cut through the noise of competing priorities and pulled everyone into shared understanding quickly, even when "everyone" included merchandising, appraisal operations, pricing teams, and engineering teams managing complex vehicle data integrations.

What I wanted instead

When I stripped everything back, I realized I wanted a process that was:

Flexible enough to adapt when business rules changed mid-flight

Aligned early so engineering wasn't surprised by edge cases in week three

Collaborative without requiring everyone to sit in Miro for two hours

Structured enough to handle complex multi-step flows with conditional logic

Fast enough to show value before leadership moved resources to the next priority

I wanted less documentation theater and more clarity, more shared understanding. A way to work that matched the speed of automotive resale and ecommerce and still gave space for thoughtful design that respected both customer anxiety about data entry and business constraints around vehicle validation.

The process I ended up creating

This process has evolved across our ecommerce checkout redesigns, internal tool creation, and review processes that need to balance speed with accuracy. It gets everyone aligned quickly and dramatically reduces the friction that usually happens between research, design, and development.

Here's the flow, with the specific tools I use at every stage.

1. Front door intake

Before anything begins, I create a single-frame Miro board that defines the question, not the solution. I used to write long briefs that nobody read. Now I make one visual page that stakeholders can scan in 90 seconds that includes the following:

What customer outcome are we trying to shift (not what feature we want to build)

Who is impacted (people selling their cars, completing checkout, dealing with appraisal questions)

What decision this work needs to support

What constraints are real (technical, legal, operational, timeline)

What already exists that we can leverage

This becomes the anchor document for everything that follows.

2. Context sweep and synthesis

Before talking to users, I check what data we already have.

What I gather:

Fullstory session replays to watch real customer behavior

Support tickets and customer feedback from our site chat feature

Sales and back-of-house associate feedback from internal tool channels

Data from my analyst partner. We look at drop-off points, funnel behavior, where people abandon flows

Prior research buried in PowerPoint decks across teams (I use Copilot to surface existing research that's not easily searchable otherwise)

Competitor flows from Carvana, DriveTime, Driveway

I dump support ticket exports, chatbot transcripts, and qualitative feedback into Claude and ask it to identify patterns, tag themes, and pull out specific customer quotes that illustrate friction points. I'm not using AI to replace analysis, I'm using it to do the tedious first-pass clustering so I can spend my time on interpretation.

This almost always uncovers redundancies, unnecessary cognitive load, and entire steps that can be removed.

3. Lightweight discovery (UserTesting, Ethnio, interviews)

CarMax customers are busy. They're trying to sell their car between work and picking up kids. They don't want to spend an hour on a call. So I design research that respects their time and gives me decision-ready insights fast.

What I run:

5 to 8 unmoderated UserTesting sessions (usually 5-15 mins max)

Five or more 15-minute phone interviews with real customers in our target segments

Organized visits to a CarMax store for observational research if the flow involves in-person steps

3 to 4 internal interviews with our back of house associates or customer care reps who talk to sellers all day

UserTesting now has auto-transcription, but I also run the transcripts of key sessions through Claude and ask it to pull out moments of confusion, positive reactions, and direct suggestions. I still watch every session myself, but having an AI-generated summary with timestamps helps me pull clips faster and share insights with stakeholders who won't watch full videos. I also ask a lot of questions that have specific answers, so it's easy to build graphs from the results and export them as a CSV for PowerPoint presentations.

This gives me fresh, contextual, decision-ready insight without slowing down the team or asking customers to do homework.

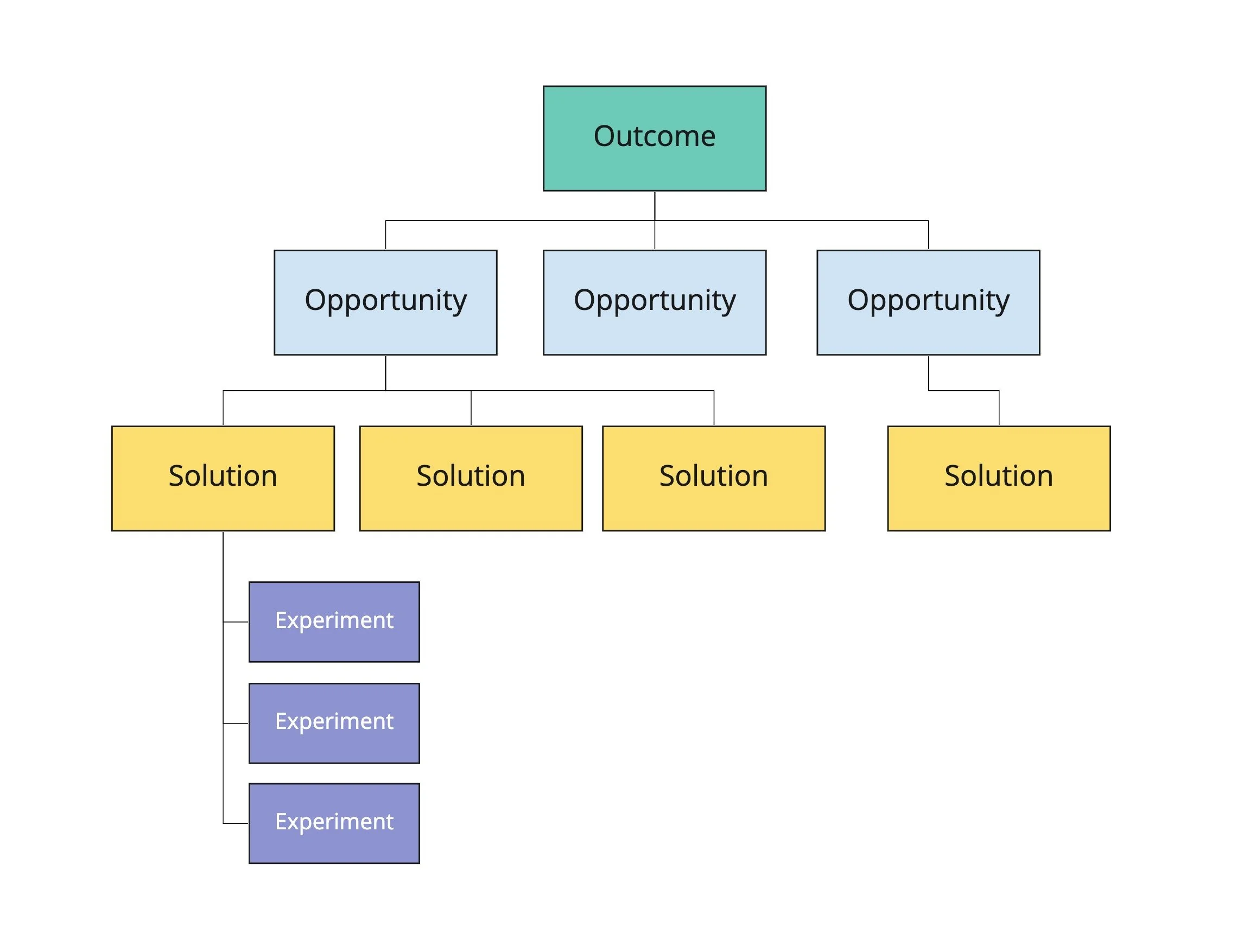

Opportunity solution tree mapping diagram (credit to Teresa Torres’ work)

4. Opportunity mapping and clustering

I take what I found and run a 60 to 90 minute Miro workshop to map opportunities and decisions. I pull in my PM and analyst here to talk about what we need to prioritize in terms of impact/effort.

I use a modified Opportunity Solution Tree in Miro, and I have Miro generate a first-draft clustering of research insights by theme. I paste that clustering into our 4x4 Priority Matrix as a starting point, then we rearrange and debate it together.

The tree gives us a simple way to see where the highest leverage work sits. It also makes it obvious when someone's pet feature doesn't actually ladder up to a customer outcome, which saves weeks of misaligned work.
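As a rough illustration of the impact/effort conversation, here is a minimal Python sketch that ranks opportunities by highest impact first, then lowest effort. The 1 to 4 scoring scale (chosen to mirror a 4x4 matrix), the `Opportunity` class, and the sample backlog are all assumptions made up for this example, not real workshop output.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    impact: int  # 1 (low) to 4 (high), assumed to be agreed in the workshop
    effort: int  # 1 (low) to 4 (high)


def rank(opportunities):
    """Sort by highest impact first, then by lowest effort."""
    return sorted(opportunities, key=lambda o: (-o.impact, o.effort))


# Hypothetical workshop output for illustration only.
backlog = [
    Opportunity("Prefill vehicle details from VIN", impact=4, effort=2),
    Opportunity("Rewrite offer explanation copy", impact=3, effort=1),
    Opportunity("Redesign photo upload step", impact=4, effort=4),
]

for o in rank(backlog):
    print(f"{o.name}: impact {o.impact}, effort {o.effort}")
```

A sorted list like this is only a starting point for the debate; the matrix and the conversation around it still decide what gets prioritized.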

5. Parallel concept sprints

I always create at least two tracks in Figma.

A simplified, optimized version of the existing flow (de-risked, shippable fast)

A more future-state, conceptual stretch version that explores what's possible if we rethink the model

This lets the team explore without losing sight of the practical path forward. I keep things mid-fidelity (components from our design system, real content, clear interaction notes) so decisions stay focused on the experience, not on whether the button's corner radius should be 8px or 12px.

CarMax flows are data-heavy. We have a lot of vehicle condition descriptions, instant offer explanations, appraisal criteria messaging, so I’ll work with my UX writer to come up with compelling copy for these pieces, or ask Claude to help with some initial options to react to.

6. Fast validation

I test quickly and often. The goal is to remove bad ideas fast and double down on what's working.

What I run:

Short 20 to 30 minute moderated sessions with real CarMax customers (I work with my analyst to recruit from people who've recently gotten offers or are in the process of selling, or find folks on Fullstory who match the criteria)

Unmoderated tests for quick gut-checks on comprehension and task success

Realistic scenarios: "You're trying to sell your 2019 Honda CR-V. Walk me through what information you'd expect to provide before you make an appointment."

Where AI helps: After testing, I run session recordings through UserTesting.com/Teams summaries that tag moments by emotion (confusion, delight, frustration). This is still experimental, but it's faster than manually scrubbing through 8 hours of video looking for the moment someone said "wait, what does this mean?"

The goal is clarity, not perfection. I'm looking for deal-breakers, comprehension failures, and moments where cognitive load spikes. I'm not trying to validate every pixel.

7. Pre-dev detail pass

This is where I tighten everything before engineering starts building.

What I document in Figma:

Edge cases (What happens when the VIN doesn't match our database? What if someone enters an odometer reading that seems incorrect?)

Component inventory (Everything uses our design system, with variants already defined)

States: default, loading, error, empty, success

Acceptance criteria embedded in design file descriptions

Business rule logic written in plain language, with conditionals mapped visually

Where AI helps: I started using Claude to help me think through edge cases I might miss. I'll paste a flow description and say, "What edge cases should I be thinking about for a vehicle condition assessment flow where customers upload photos?" It usually surfaces 3 to 5 scenarios I hadn't considered—like lighting issues, missing license plates, or photos taken from the wrong angle.
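To show what "business rule logic written in plain language" might look like once it reaches engineering, here is a small Python sketch of the VIN and odometer edge cases mentioned above. The function name, the `known_vins` lookup, and the mileage threshold are hypothetical and chosen only for illustration. The one fixed fact it relies on is that modern VINs are 17 characters long and never contain the letters I, O, or Q.

```python
import re

# Modern VINs are 17 characters and never use the letters I, O, or Q.
VIN_PATTERN = re.compile(r"^[A-HJ-NPR-Z0-9]{17}$")

# Hypothetical threshold for illustration; a real rule would come from the business.
MAX_MILES_PER_YEAR = 30_000


def check_vehicle_entry(vin, odometer, model_year, current_year, known_vins):
    """Return a list of plain-language issues; an empty list means no flags."""
    issues = []
    vin = vin.strip().upper()
    if not VIN_PATTERN.match(vin):
        issues.append("VIN format looks invalid")
    elif vin not in known_vins:
        issues.append("VIN not found in our records")

    # Treat a current-model-year vehicle as one year old to avoid a zero limit.
    age = max(current_year - model_year, 1)
    if odometer < 0:
        issues.append("Odometer cannot be negative")
    elif odometer > age * MAX_MILES_PER_YEAR:
        issues.append("Odometer seems high for the vehicle's age; ask to confirm")
    return issues


# Hypothetical data: a well-formed sample VIN and a tiny stand-in for a database.
known = {"1HGCM82633A004352"}
print(check_vehicle_entry("1HGCM82633A004352", 45_000, 2019, 2024, known))
print(check_vehicle_entry("1HGCM82633A00435O", 400_000, 2019, 2024, known))
```

Each message maps to an error state in the design file, which keeps the conditionals and the customer-facing copy in sync.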

I also partner closely with engineering here: sharing prototypes, walking through logic, and confirming feasibility, so nothing becomes a surprise during sprint planning. (My devs are awesome!)

8. Development partnership (Requirements, card building, and UAT/QA)

I stay close during the build, but not in the way that slows teams down.

How I stay involved:

Quick async messaging clarifications

Loom walkthroughs when something needs more context than a screenshot

Micro-reviews as features get built (I do a lot of UAT with my lead QA throughout the release cycle)

Visual QA to catch alignment, spacing, and interaction issues

Accessibility audits using Figma plugins and browser tools

This approach is lightweight and collaborative. It keeps quality high without blocking progress or creating a bottleneck where engineering is waiting on me to approve every commit.

9. Measuring analytics and pattern detection

After launch, I track how the experience performs in the real world.

What I monitor:

Funnel behavior (where are people dropping off compared to our hypothesis?)

Unexpected patterns (why are people abandoning the flow at the photo upload step?)

Support ticket volume and chatbot conversation themes

A/B test results if we're running an experiment

Fullstory session replay analysis for qualitative color

Where AI helps: I work with my analyst to export the data as a CSV, then I put it into Claude and ask it to flag anomalies or patterns I might miss. "Here's two weeks of post-launch data on our new appointment setting flow for [a specific customer segment]. What trends do you see?" It won't replace my analysis, but it often points me toward something worth investigating.
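Before handing an export to any tool, a quick drop-off calculation can show where to look first. The sketch below is a minimal Python example that assumes a hypothetical CSV with one row per funnel step, in order, and a `users` column counting how many people reached that step. The column names, step names, and numbers are invented for illustration.

```python
import csv
import io

# Hypothetical export: one row per funnel step, in order, with users who reached it.
SAMPLE_CSV = """step,users
Start offer,1000
Vehicle details,800
Photo upload,400
Appointment set,360
"""


def drop_off_by_step(csv_text):
    """Return (step, share of users lost since the previous step) pairs."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    results = []
    for prev, curr in zip(rows, rows[1:]):
        before, after = int(prev["users"]), int(curr["users"])
        lost = (before - after) / before if before else 0.0
        results.append((curr["step"], lost))
    return results


for step, lost in drop_off_by_step(SAMPLE_CSV):
    print(f"{step}: {lost:.0%} drop-off")
```

In this made-up data the photo upload step loses half of the people who reach it, which is the kind of signal that would send me back to the session replays.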

I create a short post-launch insights brief that guides the next iteration. This is where the next wave of opportunity usually shows up.

Why this works for me

This process gives me momentum and clarity. It matches how I naturally think and how CarMax actually operates.

It reduces unnecessary research by leveraging what we already know. It surfaces the right problems early, before engineering writes a line of code. It makes development smooth instead of combative. It helps teams make cleaner decisions in less time. It removes friction between roles because everyone sees the same picture in the same Miro board, the same Figma file, the same UserTesting clips.

It is structured, but not heavy. Clear, but not rigid. Flexible, but still grounded.

And critically, it uses AI where it actually helps, speeding up rote work like transcription, clustering, edge case generation, and surfacing hidden research with Copilot.

It works across the experiences I design most often at CarMax: instant offers, vehicle appraisals, checkout optimization, and anything involving reducing data entry friction.

What I'm still refining

I'm still evolving this process. A few areas I'm actively shaping:

Even faster validation loops (can I get quality feedback in 15 minutes instead of 30?)

Better ways to integrate Fullstory and real-time analytics into the design process, not just after launch (maybe an API/webhook opportunity here)

More sophisticated AI-assisted analysis so I don’t have to bug my analyst every time I need customer spreadsheets

Clearer frameworks for mapping data validation rules visually so engineering and operations can collaborate earlier

Reducing concept proliferation. I sometimes create too many options and overwhelm stakeholders

Final thoughts

The best UX process is the one you can actually use with real people in real conditions at real companies. This one works for me at CarMax! It creates clarity without slowing me or my team down. It gives enough structure to handle complexity and enough flexibility to move at speed.

This framework is constantly evolving! I'm building what works for the job I actually have: designing experiences that help people sell their cars efficiently with minimal data entry, in an environment where trust and speed matter equally, using tools like Miro, Figma, and UserTesting.

And I'll keep reshaping it as I learn.